How Audio Deepfakes Trick Employees (And Moms)

In 1989, Mel Blanc, the originator and primary performer of Bugs Bunny, passed away. This left Warner Brothers with a major casting problem. Mel’s performance was pretty distinctive, and finding someone who could do a perfect Bugs (the wry nasal quality, the vague New York accent) seemed an impossible task.

Until Jeff Bergman brought two tapes to his audition. One featured Mel Blanc voicing Bugs, while on the other Bergman did his version of the rascally rabbit. In the audition, Bergman played the two recordings and asked if anyone in the room could tell the difference. Nobody could, and Bergman got the job.

30 years later, another gifted impressionist managed to mimic their way to a paycheck. In 2019, the CEO of a U.K. energy firm received a call from his boss ordering the urgent transfer of $243,000 to a Hungarian supplier. He recognized the slight German accent and timbre of his boss’s voice, and duly transferred the money to the bank account given.

It wasn’t long before he learned that, like those Warner Brothers Executives, he’d been fooled by a copycat. Only in this case, the impressionist was an algorithm, and the cost of this AI voice cloning attack was embarrassingly steep.

In September of this year, the FBI, National Security Agency, and Cybersecurity and Infrastructure Security Agency released a joint 18-page document warning of the growing risk AI deepfakes pose to organizations, as well as describing a few recent deepfake attacks. While many of these attacks were unsuccessful, they indicate a growing trend of bad actors harnessing generative AI tools to go after businesses.

The role that phone calls have played in several recent hacks indicates that voice cloning isn’t just being used by small-time scammers committing wire fraud; it’s being deployed in complex phishing attacks by sophisticated threat actors.

Anatomy of a Deepfake Voice Attack

On August 27, 2023, Retool suffered a spear phishing attack which resulted in the breach of 27 client accounts. Retool described the attack in a blog post, detailing a plan that had more layers than an Ari Aster movie.

Retool employees received texts informing them of an issue with their insurance enrollment. The texts included a url designed to look like their internal identity portal.

One employee (because it only takes one!) logged into the false portal, including its MFA form.

The attacker called the employee after the login. Here’s a quote from Retool, emphasis mine: “The caller claimed to be one of the members of the IT team, and deepfaked our employee’s actual voice.” (It’s worth noting that this is their only mention of the deepfake element of the attack.)

During the conversation, the employee provided the attacker with an additional MFA code. Retool notes that, “The voice was familiar with the floor plan of the office, coworkers, and internal processes of the company.”

This code allowed the attacker to add their personal device to the employee’s Okta account, thereby allowing them to produce their own MFAs. From there– due in no small part to Google’s Authenticator synchronization feature that syncs MFA codes to the cloud–the attacker was able to start an active GSuite session.

Again, a plan with a lot of layers! It goes from smishing (a text), to phishing (a link), to good old fashioned vishing (a call). Rather than being the main tool of attack, as in the U.K. example, the deepfake element of the Retool leak is more like one particularly interesting knife in a whole arsenal.

In fact, Retool’s almost off-handed mention of voice deepfaking had Dan Goodin of Ars Technica speculating that the “completely unverified claim—that the call used an AI-generated deepfake simulating the voice of an actual Retool IT manager—may be a similar ploy to distract readers as well.”

If it is a ploy, it’s a successful one, since the artificial intelligence element of the story drew a lot of attention (and potentially distracted from Retool’s larger point that Google’s MFA policies were at fault). Regardless of its veracity, the role deepfaking plays in the narrative raises some questions:

How easy is it for someone to be audio cloned?

How likely are users to be fooled by a voice deepfake?

How concerned should security teams be about this?

How To Clone/ Deepfake a Voice

Let’s start with the first question, since understanding the process that goes into creating an audio clone of someone can help us to better understand the likelihood of any one person (be it yourself, your boss, or a random IT worker) being deepfaked.

Audio deepfakes are created by uploading recorded audio of someone’s voice to a program which generates an audio “clone” of them through AI analysis that fills in blanks like new words, emotional affect, and accent.

This voice “clone” is used either through text-to-speech (you type, and it reads the text out loud in the cloned voice) or speech-to-speech (you speak, and it converts the audio into the cloned voice). The growing ease and availability of speech-to-speech is a particular concern, since it makes real-time conversations easier to fake.

How much audio do you need to make a voice deepfake?

We’ll start by saying that the fearmongering and ad copy claiming “only seconds of your voice needed!” doesn’t seem to be that realistic, at least not if someone is trying to create a high enough quality voice clone to pull off an attack.

Resemble AI, for instance, markets themself as being able to “clone a voice with as little as 3 minutes of data.” However, in their description of their supported datasets, they state: “we recommend uploading at least 20 minutes of audio data.”

ElevenLabs, meanwhile, a popular text-to-speech company, offers “Instant Voice Cloning” with only one minute of audio. For “Professional Voice Cloning,” meanwhile, the minimum they recommend is 30 minutes of audio, with 3 hours being “optimal.”

Essentially, 3 minutes probably can make a clone, but it’s not likely to be a clone that meets the demands of, say, calling a man and impersonating his German boss in a real-time conversation. As Play.HT puts it rather succinctly: “the more audio you provide the better the voice will be.”

One rule of thumb seems to be that audio quality is more important than quantity. A smaller sample of audio recorded on a decent mic in a closed-off room is going to make a better clone than a larger sample of audio that was recorded on your phone at a train station. Most of the companies that make voice clones recommend clean, high quality voice audio, without much or any background noise. Some prefer that any other speakers are edited out as well.

So, if attackers need somewhere around 30 minutes to 3 hours of reasonably high-quality voice recording to generate a voice clone, how many of us are at risk? Unfortunately, a lot.

According to a survey by Mcafee, 53% of all adults share their voice online at least once a week (and 49% share it somewhere between five and ten times). For many people, it’s easy enough to compile an hour or so of decent audio samples just through their TikTok or Instagram videos. If they have a podcast or YouTube channel, all the better.

For CEOs and other high-level employees, getting audio clips is likely to be even simpler thanks to interviews and recorded speeches.

One might hope that the voice cloning companies could be allies against the misuse of their products, and many of these services are adopting some kind of privacy or explicit consent model, having recognized the negative potential of unauthorized cloning. Unfortunately, there are just a whole lot of voice cloning services out there in the wild west of AI, many of whom make no mention at all of consent. Even the ones that do mention it aren’t particularly clear on what their consent process looks like.

For instance, Play.HT states: “We moderate every voice cloning request to ensure voices are never cloned on our platform unethically (without the consent of the voice owner).”

But talk is cheap (or has a 30 day free trial), and when the company’s “sample voices” include people like Obama, Kevin Hart, and JFK, such statements ring a little hollow. It’s hard to believe that all of those people (or their families) gave their explicit consent to be cloned.

How Susceptible Are We to Audio Deepfakes?

To be frank, clone quality aside, it doesn’t take that much to fool the human ear. The term “deepfake” in and of itself may create a false sense of confidence, since we associate it with online videos that we can usually tell are fakes (or like to think we can). And when we think of text-to-speech, we probably imagine the highly artificial voices of Alexa or Siri. But when it comes to audio-only deepfakes, the evidence indicates that humans just aren’t very good at telling the real person from the clone.

For instance, The Economist reported on how Taylor Jones, a linguist, found various statistical flaws in his voice clone, but none of them prevented the clone from fooling his own mother during a conversation.

Meanwhile, Timothy B. Lee ran a similar experiment for Slate, and found that “people who didn’t know me well barely did better than a coin flip, guessing correctly only 54 percent of the time.” (Lee’s mom was also fooled.)

Bear in mind that the people in Lee’s experiment were entirely at their leisure, comfortable at home, and explicitly knew to be looking for a clone. And a lot of them still couldn’t find it. Now consider that most people assume that a deepfake will automatically raise our “uncanny valley” hackles without us needing to look for it at all. That confidence makes us even more vulnerable.

Want to see if you can detect a voice deepfake? Lee’s experiment is still online, so you can try it for yourself. Just promise you’ll come back and read the rest of the blog!

How Can Audio Deepfakes Compromise Company Security?

In a social engineering attack like the one at Retool, bad actors will try to make people feel rushed and stressed out. They’ll target new hires who don’t know people well and are unlikely to detect something “off” in the voice of a coworker they only just met. And in a remote workplace, you can’t turn to the person in the next cubicle and ask what’s going on, how suspicious you should be about this call from IT, or whether your refrigerator is running.

A 2022 IBM report found that targeted attacks that integrate vishing (voice phishing) are three times more likely to be effective than those that didn’t. This makes sense when we consider how social engineering works. It preys human anxiety and urgency bias, as in the energy firm case, where, “The caller said the request was urgent, directing the executive to pay within an hour…”

Phone calls in and of themselves already make a lot of people anxious: 76% of Millenials and 40% of Baby Boomers, according to a 2019 survey of English office workers. And when that phone call is from our boss, it’s guaranteed to make us even more nervous.

Just imagine getting a phone call from your boss. Not an email or Slack message. A call. The assumption for a lot of people would be that something urgent, serious, or capital “b” Big was going on.

When used in conjunction with other phishing attacks, a phone call also seems to confirm legitimacy. (That’s in spite of the fact that phones are notoriously insecure–it’s trivially easy for a hacker to use CID spoofing to mask their real phone number.)

You get a weird text in isolation and might not think too much of it. You get a weird phone call in isolation and probably won’t answer it. But when a text is followed by a phone call, things feel more “real.” This is especially true when the bad actors have done their research and have enough information about the company to seem legitimate. We see this in the Retool attack, where the voice clone was just one of several methods used to build the sense of urgency and legitimacy required to get someone to slip up.

It’s also clear in the 2020 case of a Hong Kong branch manager who received a call from the director of his parent business. In this case, the course of the attack went:

The branch manager gets a call from the (deepfaked) director saying that they’ve made an acquisition and need to authorize some transfers to the tune of 35 million USD. They’d hired a certain lawyer to coordinate the process.

The branch manager receives emails impersonating that lawyer (the name the attackers used belongs to an experienced Harvard graduate). The emails provide a general rundown of the merger, with a description of what money needs to go where.

It all seems legit, so the manager authorizes the transfers.

This case is something of a middle ground between the Retool attack’s complexity and the energy firm attack’s simplicity. Make a call, follow-up with some emails, and everything seems kosher to move forward.

What about voice authentication?

We’ve established that it’s pretty easy to trick the human ear, but what about authentication systems that use voice as a form of biometrics? The verdict there is a bit more muddled.

Voice authentication systems, which are used by some banks, have been the subject of a lot of attention in voice clone discussion. And what research is out there indicates that they are potentially vulnerable to deepfakes. However, they’re hardly the biggest threat to security.

“‘While scary deepfake demos are a staple of security conferences, real-life attacks [on voice authentication software] are still extremely rare,’ said Brett Beranek, the general manager of security and biometrics at Nuance, a voice technology vendor that Microsoft acquired in 2021. ‘The only successful breach of a Nuance customer, in October, took the attacker more than a dozen attempts to pull off.’” -The New York Times, “Voice Deepfakes Are Coming for Your Bank Balance,” Aug 30, 2023

If your company uses voice authentication, then maybe reassess that and use a different biometric or hardware-based authentication method. When it comes to protecting the other vulnerabilities exploited by audio deepfakes, though, it gets a little more complicated.

How Can We Guard Against Voice Cloning Attacks?

To summarize: Audio deepfakes are becoming easier, cheaper, and more realistic than ever before. On top of that, humans (yes, you too) aren’t great at identifying them. In short, whatever else went on with Retool, the audio deepfake element of their story isn’t all that far-fetched.

So what can individuals and security teams do to guard against deepfakes?

There are plenty of solid methods that companies can take to add some security to high-impact or high-risk calls in which an employee is being asked to transfer money or share sensitive data.

Most of them are even pretty low-tech:

Integrate vishing into your company security training. Companies often overlook vishing, and employees need practice to recognize warning signs and think past that “urgency bias.” In trainings, remove “urgency” by giving employees explicit permission to always verify, verify, verify, even if it makes processes take a bit longer.

If there are real situations in your business where sensitive interactions happen over the phone, establish verbal passwords or code phrases. This is common advice to families worried about the uptick in “kidnapping” voice cloning scams.

-

Hang up.

a) No, you hang up! <3

But seriously. Hang up and call the person back. It’s unlikely that a hacking group has the power to reroute phone calls, unless there’s also a SIM swapping element to the attack. For high-stakes or unusual calls, establish as a best practice that recipients hang up, personally call the number on record for the person in question, and confirm the call and the request.

Finally, yes, there are audio deepfake detection tools coming out that promise the ability to detect fake voices (including offerings from the deepfake makers themselves). It’s certainly worth keeping an eye on these developments and considering how useful they may or may not be. Still, a tech solution focused only on deepfakes is not likely to be the most permanent or comprehensive solution for the broader problems that make vishing attacks successful.

De-fang deepfakes with Zero Trust

These are all protections against audio deepfakes specifically. But in sophisticated attacks, an audio clone probably won’t be the silver bullet that single-handedly conquers a company’s security. Compensating for them alone might not prevent an attack.

For instance, in the case of the MGM Casino Hack, “…it appears that the hackers found an employee’s information on LinkedIn and impersonated them in a call to MGM’s IT help desk to obtain credentials to access and infect the systems.” By all accounts, artificial intelligence wasn’t even necessary–just someone impersonating someone else the old fashioned way.

In cases like Retool and MGM Casinos, the goal isn’t a one-time money transfer but a foothold into your systems, with the potential for an even bigger payout in the end. To protect against these kinds of attacks, you need Zero Trust security to make sure that it’ll take more than a phished set of credentials to get into your apps.

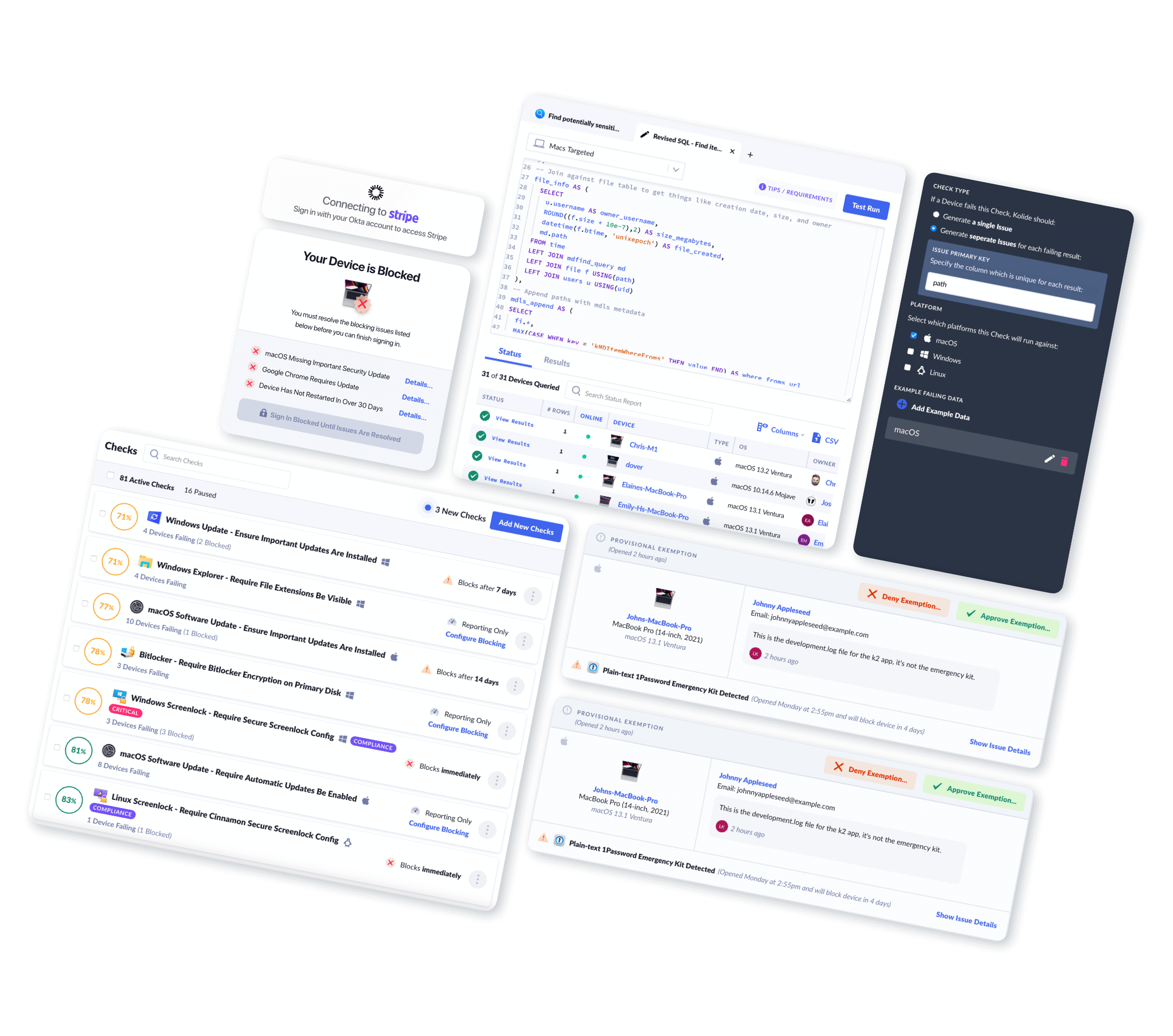

For instance (pitch time) if a company like Retool was using Kolide’s device trust solution, this one phone call may not have been quite so destructive.

As Retool describes that deepfaked phone call: “Throughout the conversation, the employee grew more and more suspicious, but unfortunately did provide the attacker one additional multi-factor authentication (MFA) code… The additional OTP token shared over the call was critical, because it allowed the attacker to add their own personal device to the employee’s Okta account.”

In a company using Kolide, however, the user would have needed to:

Gotten approval to add Kolide to a new device. With Kolide, no device can authenticate via Okta unless our agent is installed, which requires confirmation from the user (on their existing device) or an admin.

Meet eligibility requirements. Even if the user or admin did approve the registration prompt, the device would have still needed to pass device posture checks in order to authenticate. Usually, this would include checking that the device is enrolled in the company’s MDM, and can also include checking the device’s location and security posture.

Kolide is customizable when it comes to which checks a company requires. It’s possible that an organization might not require an MDM check, in which case the eventual MFA authentication still would have gone through. But with the right checks in place, this attack would have been much less likely, if not impossible.

With all that in mind, we do want to stress that no single solution can stop all social engineering attacks, and there are no technological substitutes for good employee training.

AI Voice Cloning Is a New Twist on an Old Trick

It’s no surprise that audio deepfaking is a headline-grabbing issue. It’s new, it’s high-tech, and it plays on some of our deepest fears about how easily AI can do things that once felt intrinsically human. Nonetheless, while voice cloning seems cutting edge, the principle behind it is as old as security itself. There’s a Saturday Morning Breakfast Cereal cartoon that describes this very phenomenon as early as 2012.

Audio deepfakes represent a significant risk, but it’s not a new risk. Voice cloning’s biggest power lies in the ways it can enhance the believability of vishing attacks. But that advantage disappears if employees are clear about what they should believe and listen for on a call.

Keeping teams educated and adding checks and stopgaps takes time, and that can be a tough sell, especially when there are AI vendors promising that their algorithm can do all the work for you. But to guard against social engineering attacks, security teams need to put as much focus on the human element as social engineers do. Adding a little time and friction are exactly what can make the difference between a “split-second mistake” and a “measured response.” Always give people the information they need to make the right call.

Want to read more security stories like this one? Subscribe to the Kolidescope newsletter!